Issue No. 38

This week’s issue explores something I’ve become increasingly aware of in our current climate—how easy it is to draw the wrong conclusion or make a bad decision when we only consider the things we see.

Three things happened recently

A company downtown wanted to know if their employees were happy. They sent out a survey, people responded, and the results looked something like the graph below. A few comments suggested areas for improvement, but on the whole, employees were happy and sentiment was positive.

The company decided that morale was therefore good and no intervention was necessary.

Anything particularly wrong with that conclusion, or is it a reasonable one?

A few miles north, a race car team was deciding whether to participate in a major tournament over the weekend. The stakes were high—winning would mean huge prize money, but losing would mean the team would probably get dropped by their sponsor given the team’s recent streak of engine troubles.

A mechanic on the team reminded everyone that the weather would be cold on race day (32 °F / 0 °C). And historically, they tended to have more engine failures in colder weather.

To validate that hunch, the head mechanic analyzed the data on engine failures and temperature and shared the results with everyone. Across all the races they hadn’t finished, there was no connection between the two: failures could happen at any temperature.

And so, the team decided, the race would go ahead.

Anything particularly wrong with that conclusion?

A few miles south, a little startup was scratching their heads trying to figure out why they kept losing drones. They’d been trying to get a food-delivery-by-drone business off the ground for several months, but vandals kept throwing rocks at their drones, causing them to crash to the ground. Many drones were lost that way, not to mention many a boba tea with extra sugar.

The CTO called everyone into a conference room and shared a slide of one of their drones. It had red dots to indicate all the locations where drones had been hit. “Given our drones have shown damage in these spots—here, here, and here; the four arms, basically—I propose we reinforce those arms to avoid losing as many drones as we do.”

The CEO nodded and agreed that it was a reasonable plan.

How about that conclusion?

Anything particularly wrong with it?

One common blind spot

All three examples have one thing in common. In each, a decision-maker unknowingly based their conclusion on only the part of the data they could see.

In the first example, the company learns what employees who filled out the survey think, and they’re pretty happy. But it can’t tell you what employees who didn’t fill out the survey think. They could all be so distraught, demoralized, and cynical that they chose to ignore the survey altogether.[1]
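To put rough numbers on that, here’s a minimal Python sketch of worst-case bounds (sometimes called Manski bounds) on the share of happy employees when some don’t respond. The function `satisfaction_bounds` and the survey counts are hypothetical, made up for illustration. Since the survey tells us nothing about non-respondents, the low bound assumes they’re all unhappy and the high bound assumes they’re all happy.

```python
def satisfaction_bounds(n_employees: int, n_responded: int, n_happy: int) -> tuple[float, float]:
    """Bound the share of ALL employees who are happy, given survey counts.

    Hypothetical helper for illustration. The survey says nothing about
    non-respondents, so the low bound assumes every one of them is unhappy
    and the high bound assumes every one of them is happy.
    """
    if n_employees <= 0 or not 0 <= n_happy <= n_responded <= n_employees:
        raise ValueError("need 0 <= happy <= responded <= employees, with employees > 0")
    n_silent = n_employees - n_responded
    low = n_happy / n_employees
    high = (n_happy + n_silent) / n_employees
    return low, high


# Hypothetical survey (made-up numbers): 200 employees, 80 responded, 68 of them happy.
respondent_share = 68 / 80
low, high = satisfaction_bounds(200, 80, 68)
print(f"Happy among respondents: {respondent_share:.0%}")
print(f"Happy among all employees: between {low:.0%} and {high:.0%}")
```

With only 40% of employees responding, a high approval rate among respondents is consistent with anywhere from about a third to nearly all of the company being happy. The survey on its own can’t tell those apart.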

In the second example, the team knows the temperature during races that had engine failures. But what about races that didn’t? If the head mechanic had plotted those races too, they would have reached a different conclusion: failed races happen at all temperatures, but successful races always happen above 50 °F (10 °C).
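A tiny made-up race log shows how that plays out. The data in `races` and the helper `failure_rate` are hypothetical, chosen only to match the pattern described (failures at every temperature, successes only above 50 °F); they aren’t the team’s data or NASA’s. Looking at failed races alone, temperature seems irrelevant; comparing failure rates across all races tells a different story.

```python
# Hypothetical race log: (temperature in °F, did the engine fail?).
# Made-up numbers for illustration only.
races = [
    (40, True), (45, True), (48, True),
    (55, True), (58, False), (62, False), (65, False),
    (68, True), (70, False), (72, False), (75, False),
    (78, False), (80, True), (82, False), (85, False),
]


def failure_rate(log, in_range):
    """Share of races whose temperature satisfies `in_range` that had an engine failure."""
    outcomes = [failed for temp, failed in log if in_range(temp)]
    return sum(outcomes) / len(outcomes) if outcomes else float("nan")


# What the head mechanic looked at: failed races only.
# They span the whole range, so temperature looks irrelevant.
failure_temps = sorted(temp for temp, failed in races if failed)
print("Temperatures of failed races:", failure_temps)

# The fuller picture: include the races that went fine and compare rates.
cold = failure_rate(races, lambda t: t < 50)
warm = failure_rate(races, lambda t: t >= 50)
print(f"Failure rate below 50 °F: {cold:.0%}")
print(f"Failure rate at 50 °F or above: {warm:.0%}")
```

In this toy log, every race below 50 °F ended in an engine failure, versus one in four above it, and race day, at 32 °F, would be colder than any race in the log.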

In the third example, the team knows which locations get hit most often on drones that come back or are recovered. But what about the locations that get hit on drones that don’t come back? Surely, those are more critical to reinforce, since hits there bring a drone down. The ones that come back, damaged though they are, survive.
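A quick simulation makes the drone point concrete. It’s a minimal Python sketch under made-up assumptions: each drone takes exactly one hit, every part is equally likely to be hit, and a hit to the body (hypothetically the only fatal spot) brings the drone down. The names `PARTS`, `FATAL`, and `simulate` are illustrative, not from any real system.

```python
import random
from collections import Counter

# Hypothetical drone model (assumptions for illustration only):
# each drone takes exactly one hit, equally likely on any part,
# and only a hit to the body is fatal.
PARTS = ["arm_1", "arm_2", "arm_3", "arm_4", "body"]
FATAL = {"body"}


def simulate(n_drones: int, seed: int = 0) -> tuple[Counter, Counter]:
    """Return (hits seen on drones that came back, hits on all drones)."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    returned: Counter = Counter()
    everything: Counter = Counter()
    for _ in range(n_drones):
        part = rng.choice(PARTS)
        everything[part] += 1
        if part not in FATAL:
            # Only drones that survive make it back to be inspected.
            returned[part] += 1
    return returned, everything


returned, everything = simulate(10_000)
for part in PARTS:
    print(f"{part:6}  seen on returned drones: {returned[part]:5}  actual hits: {everything[part]:5}")
```

The returned drones show hits only on the four arms, and the body looks untouched, not because it’s never hit but because every drone hit there is lost. That’s the pattern on the CTO’s slide, and in this model it points to reinforcing the body, not the arms.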

These things happen all the time

While these particular examples are made up, they’re based on actual events. The first example is from firsthand experience. The second is drawn from the Challenger space shuttle explosion, caused by O-rings that failed on launch day due to cold weather.[2] And the third is drawn from WWII, when a stats team at Columbia made a recommendation to the US Navy about how best to reinforce its aircraft.

“The bullet holes in the returning aircraft represented areas where a bomber could take damage and still fly well enough to return safely to base. Therefore, [they] proposed that the Navy reinforce areas where the returning aircraft were unscathed, inferring that planes hit in those areas were the ones most likely to be lost.”[3]

Three types of selection bias

This tendency to not quite see the whole picture, using what we know about part of a population (the part we can see) to form an opinion or reach a conclusion about the whole population, is called selection bias. It skews our understanding of reality.

We don’t consider the silent employees. We don’t consider the races that didn’t fail. We don’t consider the drones that didn’t make it back.

Non-response. Sometimes, selection bias is caused by non-response, as we saw with the survey example. We survey everyone, only a subset responds, and we draw a conclusion without considering those who didn’t respond.

Sampling. Sometimes, it’s caused by how we sample, as we saw with the race car example. We look only at races where engine failure occurred and use those alone to determine whether there’s an association between engine failure and temperature.

Survivorship. Sometimes, it’s caused by survivorship, as we saw with the drone example. We look only at the drones that came back and draw a conclusion from their damage.[4]

Intentional selection bias

**This section contains spoilers**

I watched a show called Adolescence with my daughter this past week, in no small part because of Stephen Graham, I’ll admit. I thought it was excellent. Central to the story is a boy who may or may not have committed murder. He’s adamant that he’s innocent at the outset, but as the show develops, we find out there’s more to the story.

My daughter and I were at a cafe two days ago, and she asked whether distorting our memories is an example of selection bias. It got me thinking about what an interesting framing that is.

We’re sometimes so moved by wishful thinking, or by a need to block out a horrible memory of something we’ve done, that we distort reality. It’s as though there’s a bank of latent realities in our minds, one of which we hope is true, and we pick it when we don’t want to deal with actual reality. I see that a lot with politicians and in the media, in how they portray the world to us.

What’s the long and short of it?

Ask about what’s left unsaid, who’s left unseen, what’s left uncovered. It can be tempting to take the visible as the totality of reality. As the full picture. But often reading between the lines or peeking behind a certain framing can help sharpen our understanding of the world.

A cover is not the book

So open it up and take a look

’Cause under the covers one discovers

That the king may be a crook

Chapter titles are like signs

And if you read between the lines

You’ll find your first impression was mistook

For a cover is nice

But a cover is not the book

(“A Cover Is Not the Book,” Mary Poppins Returns)

I like to imagine everyone I meet in the world is secretly writing a memoir. And that it would be a shame to be a villain in someone else’s story.

Until next time.

Be well,

Ali

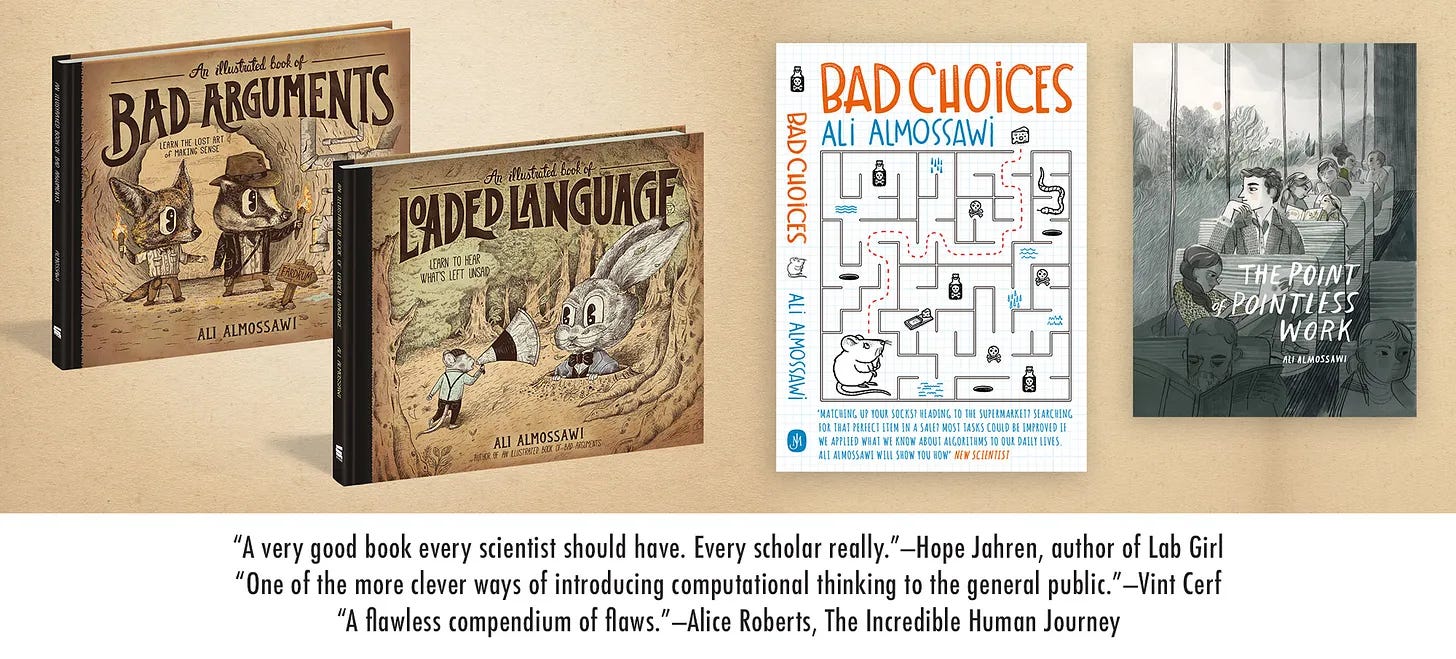

P.S. Check out the last issue in case you missed it, where I talk to Karen Giangreco about book publishing. I’ll be doing more podcast-style issues later in the year.

[1] I feel we’re sometimes prone to this tendency with algorithms as well. We’ll look at what bubbles up (what’s visible) and forget there’s a long tail of things that may be equally worthy of our attention.

[2] There’s a remix of the Challenger space shuttle explosion called the Carter Racing Problem, which I adapted here.

[3] https://en.wikipedia.org/wiki/Survivorship_bias

[4] You’ll see this in other places too, like investing, where someone might talk about the picks they got right and not mention the ones they got wrong. Or with companies that want to convince you to join them: YouTube, for instance, might tell you about all the creators who are making a killing but never mention the long tail of those who don’t make it.